Understanding Isolation Levels in Galera Cluster

In an earlier article we went through the process of certification-based replication in Galera Cluster for MySQL and MariaDB. To recap, Galera Cluster is a multi-master cluster setup that uses the Galera Replication Plugin for synchronous, certification-based database replication. This article examines isolation levels in Galera Cluster, which are an inherent feature of the MySQL InnoDB storage engine.

Monitoring Your MySQL Database Using mysqladmin

As with any other long-running process, a MySQL database needs sustained management and administration, of which monitoring is an important part. Database monitoring helps you find misconfigurations, under- or over-allocation of resources, inefficient schema design, security issues, and poor performance such as slowness, aborted connections, hanging processes and partial result sets. It gives you an opportunity to fine-tune your platform server, your database server, its configuration and other performance factors. This article provides insight into monitoring your MySQL database by tracking and analyzing important database metrics, variables and logs using mysqladmin.

Choosing between AWS RDS MySQL and Self Managed MySQL EC2

The Amazon Web Services (AWS) products AWS EC2 and AWS RDS come with sophisticated features and capabilities that efficiently serve the needs of web-based computing and storage. When deciding between AWS RDS and self-managed MySQL on AWS EC2, or any independent MySQL DBaaS, for your MySQL database needs, there are some prominent factors to consider. These factors are crucial and affect both the operational and the economic aspects of your database deployment. This article provides a comprehensive overview of both products to help you understand the distinction between them and make a suitable, cost-effective decision.

Securing MySQL NDB Cluster

As discussed in a previous article about MySQL NDB Cluster, it is a database cluster setup based on the shared-nothing clustering and database sharding architecture. It is built on the NDB (or NDBCLUSTER) storage engine for the MySQL DBMS. A second article, on MySQL NDB Cluster installation, outlined how to install the cluster by setting up its nodes: the SQL node, the data nodes and the management node. This article discusses how to secure your MySQL NDB Cluster from the following perspectives:

1. Network security
2. Privilege system
3. Standard security procedures for the NDB cluster

Detailed information is available in the MySQL Developer Documentation on NDB Cluster security.

How to install MySQL NDB Cluster on Linux

As discussed in the previous overview article about MySQL NDB Cluster, it is built on the NDBCLUSTER storage engine for MySQL and provides high availability and scalability. This article explains how to install MySQL NDB Cluster in a Linux environment. Detailed documentation is available on the official MySQL Developer Documentation page.

Quest Software released Toad Edge to Simplify MySQL Database Development

Open source database management systems have increased their market share dramatically over the last couple of years. Market analysts such as Gartner predict a shift from commercial, closed-source DBMSs to open source DBMSs in the coming years. According to Gartner, by 2018 more than 70 percent of in-house deployments will be on open source DBMSs, and 50 percent of existing RDBMS deployments will have been converted or will have started the process.

An Overview of MySQL NDB Cluster

The “Shared Nothing” Architecture and Database Sharding

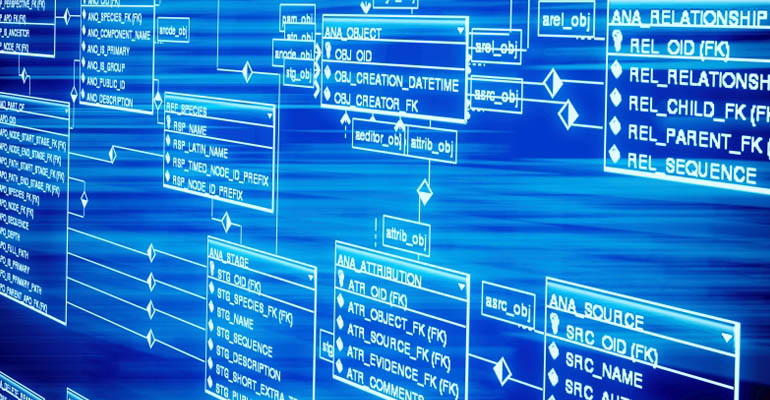

Shared nothing (SN) is a distributed computing architecture made up of interconnected but independent computing nodes, each with its own memory and disk storage that are not shared with the others. Compared to a distributed architecture built around a centralized controller, the SN architecture eliminates the “single point of failure”. An SN architecture also scales well, because nodes can be added as needed. The data is distributed, or partitioned, among the different nodes, which run on separate machines, and a coordination protocol is used to respond to user queries. This is also called database sharding, where the data is horizontally partitioned. A database shard that contains a partition is held on a separate database server instance to spread the load and to contain failures. In horizontal partitioning, a table is split by rows, whereas in vertical partitioning or normalization it is split by columns. Each data set becomes part of a shard, and the shards are distributed across different database servers and, if needed, across different physical locations.
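The horizontal partitioning described above can be illustrated with a minimal Python sketch. It is a generic illustration of hash-based sharding, not NDB Cluster's actual partitioning code: the function names `shard_for` and `partition_rows`, the sample rows and the shard count of 3 are all assumptions made for this example.

```python
import hashlib


def shard_for(key, shard_count):
    """Map a row key to a shard index using a stable hash (hypothetical helper)."""
    digest = hashlib.sha256(str(key).encode("utf-8")).hexdigest()
    return int(digest, 16) % shard_count


def partition_rows(rows, key_column, shard_count):
    """Horizontally partition rows: each whole row lands in exactly one shard."""
    shards = [[] for _ in range(shard_count)]
    for row in rows:
        shards[shard_for(row[key_column], shard_count)].append(row)
    return shards


# Illustrative sample data; every row keeps all of its columns.
rows = [
    {"id": 1, "name": "alice", "city": "Oslo"},
    {"id": 2, "name": "bob", "city": "Lima"},
    {"id": 3, "name": "carol", "city": "Pune"},
    {"id": 4, "name": "dave", "city": "Kyiv"},
]

shards = partition_rows(rows, "id", 3)
for index, shard in enumerate(shards):
    print(f"shard {index}: {shard}")
```

Each row is stored whole in one shard, which is what distinguishes horizontal partitioning from vertical partitioning, where a row's columns would be split across tables. In a real deployment each list would correspond to a separate database server instance.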